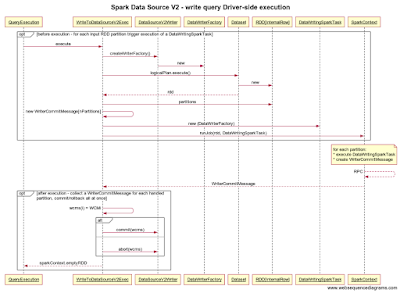

Before you can read anything you need to have it written in the first place. The read path considered previously is a little simpler, but it shares a lot with the write path we'll delve into today. Initially I am going to concentrate on the basic flow and ignore predicate push-down and column pruning. As my running example I assume a trivial Spark expression that writes some existing Dataset, such as `df.write.format("someDSV2").option("path", hdfsDir).save()`.

A data source is represented in a logical plan by a WriteToDataSourceV2 instance created by the DataFrameWriter. During planning, DataSourceV2Strategy transforms this logical node into a WriteToDataSourceV2Exec instance. The latter uses the Spark context to execute the plan associated with the Dataset. The resulting RDD determines the number of input partitions and therefore the number of required write tasks. In addition, WriteToDataSourceV2Exec keeps track of the WriterCommitMessages that make writing data source partitions transactional.

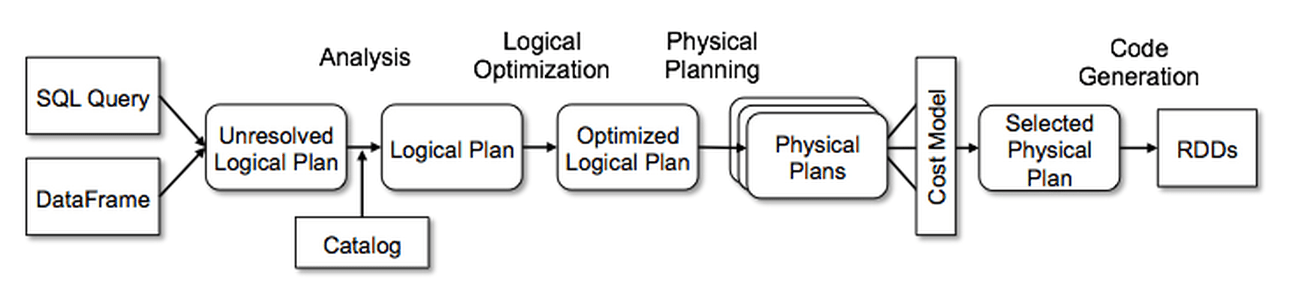

Query planning

In this phase the goal is to create a WriteToDataSourceV2 from a Dataset and client-supplied options and then transform it into a WriteToDataSourceV2Exec. The process is initiated by saving a Dataset. It triggers both logical and physical planning stages.

- DataFrameWriter searches for an implementation of the DataSourceV2 marker interface. The implementation is expected to also implement the WriteSupport mixin interface, which is the actual entry point into the writing functionality

- WriteSupport is a factory of DataSourceV2Writer instances

- DataSourceV2Writer can create a DataWriterFactory and is ultimately responsible for committing or rolling back write partition tasks

- WriteToDataSourceV2 is the logical plan node that represents a data source

- A QueryExecution is associated with the WriteToDataSourceV2 to help with coordination of query planning

- SparkPlanner uses DataSourceV2Strategy to transform logical plan nodes into physical ones

- DataSourceV2Strategy recognizes data source logical nodes and transforms them into physical ones

- WriteToDataSourceV2 carries a DataSourceV2Writer and the logical plan associated with the Dataset; both are given to WriteToDataSourceV2Exec

- WriteToDataSourceV2Exec is the physical plan node that represents a data source
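
The hand-off described in the list above can be sketched without Spark on the classpath. The snippet below is only a toy model: `ToyWriteSupport`, `ToySourceWriter`, `ToyLogicalWrite`, `ToyPhysicalWrite` and `PlanningSketch` are hypothetical stand-ins for the roles of WriteSupport, DataSourceV2Writer, WriteToDataSourceV2, WriteToDataSourceV2Exec and the planner, and none of the signatures are Spark's.

```java
import java.util.Map;

// Toy stand-ins named after the roles in the list above.
// They are NOT Spark's interfaces; every signature here is an assumption.
interface ToySourceWriter { }                    // role of DataSourceV2Writer

interface ToyWriteSupport {                      // role of the WriteSupport mixin
    ToySourceWriter createWriter(Map<String, String> options);
}

// Logical node: carries the writer and the query that produces the rows.
final class ToyLogicalWrite {
    final ToySourceWriter writer;
    final String query;

    ToyLogicalWrite(ToySourceWriter writer, String query) {
        this.writer = writer;
        this.query = query;
    }
}

// Physical node: receives exactly what the logical node carried.
final class ToyPhysicalWrite {
    final ToySourceWriter writer;
    final String query;

    ToyPhysicalWrite(ToySourceWriter writer, String query) {
        this.writer = writer;
        this.query = query;
    }
}

final class PlanningSketch {
    // Role of the data frame writer: check for write support,
    // ask the source for a writer, and build the logical node.
    static ToyLogicalWrite toLogical(Object source, Map<String, String> options, String query) {
        if (!(source instanceof ToyWriteSupport)) {
            throw new UnsupportedOperationException(
                source.getClass().getName() + " does not support writing");
        }
        ToySourceWriter writer = ((ToyWriteSupport) source).createWriter(options);
        return new ToyLogicalWrite(writer, query);
    }

    // Role of the planning strategy: turn the logical node into a physical one.
    static ToyPhysicalWrite toPhysical(ToyLogicalWrite logical) {
        return new ToyPhysicalWrite(logical.writer, logical.query);
    }
}
```

The point of the sketch is that the writer is created once, during planning, and the same instance travels from the logical node to the physical one.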

Query execution - Driver side

In this phase the goal is to obtain data partitions from the RDD associated with the Dataset and trigger execution of a write task for each partition. In this section we look at the Driver-side half of the process.

- WriteToDataSourceV2Exec creates a single DataWriterFactory and one write task per partition

- The Spark context executes the tasks on worker nodes and collects WriterCommitMessages from the ones that finish successfully

- WriteToDataSourceV2Exec uses DataSourceV2Writer to commit or roll back the write transaction using the collected WriterCommitMessages
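
The driver-side protocol above boils down to "collect one commit message per partition, then commit everything or abort everything". Here is a minimal sketch of that idea, assuming hypothetical names (`ToyCommitMessage`, `ToyJobWriter`, `DriverSketch`) and a sequential loop in place of Spark's parallel task scheduling. Returning a boolean instead of propagating the failure is also a simplification of mine.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntFunction;

// Toy commit message: what a finished partition task reports back (assumed shape).
final class ToyCommitMessage {
    final int partition;
    final long rows;

    ToyCommitMessage(int partition, long rows) {
        this.partition = partition;
        this.rows = rows;
    }
}

// Role of DataSourceV2Writer on the driver: finalize or undo the whole job.
interface ToyJobWriter {
    void commit(List<ToyCommitMessage> messages);
    void abort(List<ToyCommitMessage> messages);
}

final class DriverSketch {
    // Runs one task per partition and collects the commit messages.
    // If every task succeeds the job is committed; if any task fails,
    // the job is aborted with the messages gathered so far.
    static boolean runJob(int numPartitions,
                          IntFunction<ToyCommitMessage> task,
                          ToyJobWriter writer) {
        List<ToyCommitMessage> messages = new ArrayList<>();
        try {
            for (int p = 0; p < numPartitions; p++) {
                // Sequential here; in Spark these tasks run on executors.
                messages.add(task.apply(p));
            }
        } catch (RuntimeException e) {
            writer.abort(messages);
            return false;
        }
        writer.commit(messages);
        return true;
    }
}
```

A call such as `DriverSketch.runJob(3, p -> new ToyCommitMessage(p, 10), writer)` would invoke `writer.commit` with three messages, while a task that throws makes the sketch call `writer.abort` instead.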

Query execution - Executor side

The second half of the execution phase takes place on the Executor side.

- DataWriterFactory is serialized and sent to worker nodes, where DataWritingSparkTask instances are executed

- The executor provides a DataWritingSparkTask with a Row iterator and a partition index

- The task acquires a DataWriter from the DataWriterFactory and writes all partition Rows with the DataWriter

- The task calls the DataWriter to commit writing and propagates a WriterCommitMessage to the driver
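
The executor-side steps above can be modeled as a small loop: obtain a writer from the factory, write every row of the partition, then commit and hand the resulting message back. The sketch below uses hypothetical stand-ins (`ToyDataWriter`, `ToyDataWriterFactory`, `TaskSketch`, `BufferingWriter`) and a plain `String` as the commit message. The abort-on-failure branch is my assumption about what a well-behaved task does; the list above does not describe it.

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Role of DataWriter: writes the rows of one partition (assumed signatures).
interface ToyDataWriter<T> {
    void write(T record);
    String commit();   // returns the commit message destined for the driver
    void abort();
}

// Role of DataWriterFactory: serializable so it can be shipped to executors.
interface ToyDataWriterFactory<T> extends Serializable {
    ToyDataWriter<T> createDataWriter(int partitionId);
}

final class TaskSketch {
    // Role of the write task: one writer per partition, all rows, then commit.
    static <T> String runTask(ToyDataWriterFactory<T> factory,
                              int partitionId,
                              Iterator<T> rows) {
        ToyDataWriter<T> writer = factory.createDataWriter(partitionId);
        try {
            while (rows.hasNext()) {
                writer.write(rows.next());
            }
            return writer.commit();
        } catch (RuntimeException e) {
            writer.abort();   // undo partial output before reporting the failure
            throw e;
        }
    }
}

// In-memory writer used only to exercise the sketch.
final class BufferingWriter implements ToyDataWriter<String> {
    private final int partitionId;
    private final List<String> buffer = new ArrayList<>();
    boolean aborted = false;

    BufferingWriter(int partitionId) {
        this.partitionId = partitionId;
    }

    @Override
    public void write(String record) {
        if (record == null) {
            throw new IllegalArgumentException("null row");
        }
        buffer.add(record);
    }

    @Override
    public String commit() {
        return "partition " + partitionId + ": " + buffer.size() + " rows";
    }

    @Override
    public void abort() {
        buffer.clear();
        aborted = true;
    }
}
```

Keeping the factory serializable and the writer not mirrors the split in the text: the factory crosses the network once, while each writer lives and dies inside a single task.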