With the general idea of Spark Data Source API v2 understood, it's time to take another step towards working code. Quite some time ago I experimented with the original Data Source API, so it's only natural to see how that idea looks after the major API overhaul. I make the same assumptions as before, so this is more of a prototype illustrating the key interactions.

Write path

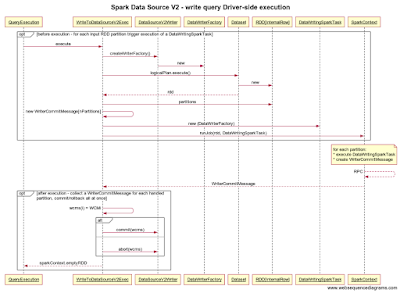

In the write path a new Dataset is created from a few data rows generated by the client. An application-specific Lucene document schema is used to mark which columns to index, store or both. The Dataset is repartitioned and each partition is stored as an independent Lucene index directory.

- Client - creates a new Dataset from a set of rows; configures data source-specific properties (Lucene document schema and the root directory for all index partitions)

- LuceneDataSourceV2 - plugs LuceneDataSourceV2Writer into the standard API

- LuceneDataSourceV2Writer - creates a new LuceneDataWriterFactory; on commit saves the Lucene schema into a file common to all partitions; on rollback would be responsible for deleting the created files

- LuceneDataWriterFactory - is sent to worker nodes where it creates a LuceneDataWriter for every partition and configures it to use a dedicated directory

- LuceneDataWriter - creates a new Lucene index in the given directory and writes every Row of the partition to the index

- LuceneIndexWriter - is responsible for building a Lucene index represented by a directory in the local file system; it's basically the same class as used in my old experiment
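
The division of labour between the driver-side writer, the serializable factory and the per-partition writers can be sketched without any Spark or Lucene dependency. The following is only a rough model under stated assumptions: every class and method name is hypothetical, rows are plain strings, a text file stands in for the Lucene index, and the file names `rows.txt` and `schema.txt` are invented for illustration.

```java
import java.io.IOException;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Dependency-free model of the write path; all names are hypothetical. */
final class WritePathSketch {

    /** Worker side: buffers the rows of one partition, then writes them out. */
    static final class PartitionWriter {
        private final Path dir;
        private final List<String> rows = new ArrayList<>();

        PartitionWriter(Path dir) { this.dir = dir; }

        void write(String row) { rows.add(row); }

        /** A real writer would build a Lucene index in dir instead of a text file. */
        void commit() {
            try {
                Files.createDirectories(dir);
                Files.write(dir.resolve("rows.txt"), rows);
            } catch (IOException e) { throw new UncheckedIOException(e); }
        }
    }

    /** Serializable so it could be shipped to workers; holds only the root path. */
    static final class WriterFactory implements Serializable {
        private final String rootDir;

        WriterFactory(String rootDir) { this.rootDir = rootDir; }

        /** Every partition gets its own dedicated subdirectory. */
        PartitionWriter createWriter(int partitionId) {
            return new PartitionWriter(Paths.get(rootDir, "partition-" + partitionId));
        }
    }

    /** Driver side: job-level commit and rollback. */
    static final class JobWriter {
        private final Path rootDir;
        private final String schema;

        JobWriter(Path rootDir, String schema) { this.rootDir = rootDir; this.schema = schema; }

        WriterFactory createWriterFactory() { return new WriterFactory(rootDir.toString()); }

        /** After all partitions succeeded: store the schema shared by all of them. */
        void commit() {
            try {
                Files.write(rootDir.resolve("schema.txt"), Collections.singletonList(schema));
            } catch (IOException e) { throw new UncheckedIOException(e); }
        }

        /** On failure: delete everything created under the root directory. */
        void abort() {
            try (Stream<Path> paths = Files.walk(rootDir)) {
                paths.filter(p -> !p.equals(rootDir))
                     .sorted(Comparator.reverseOrder()) // children before parents
                     .forEach(p -> p.toFile().delete());
            } catch (IOException e) { throw new UncheckedIOException(e); }
        }
    }

    /** Runs a two-partition job in a temp directory and lists the resulting files. */
    static List<String> demo(boolean fail) {
        try {
            Path root = Files.createTempDirectory("lucene-write-sketch");
            JobWriter job = new JobWriter(root, "title:indexed,body:stored");
            WriterFactory factory = job.createWriterFactory();
            for (int partition = 0; partition < 2; partition++) {
                PartitionWriter writer = factory.createWriter(partition);
                writer.write("row of partition " + partition);
                writer.commit();
            }
            if (fail) { job.abort(); } else { job.commit(); }
            try (Stream<Path> paths = Files.walk(root)) {
                return paths.filter(Files::isRegularFile)
                            .map(p -> root.relativize(p).toString().replace('\\', '/'))
                            .sorted()
                            .collect(Collectors.toList());
            }
        } catch (IOException e) { throw new UncheckedIOException(e); }
    }
}
```

Calling `WritePathSketch.demo(false)` leaves one `rows.txt` per partition directory plus a single `schema.txt` in the root, while `WritePathSketch.demo(true)` leaves no files behind. The factory carries only a path string because it is the one object that has to cross the driver/worker boundary.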

Read path

In the read path a previously created directory structure is parsed to find the Lucene schema and then load a Dataset partition from every subdirectory. Optionally, (A) the data schema is pruned to retrieve only requested columns and (B) supported filters are pushed to the Lucene engine to retrieve only matching rows.
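
Steps (A) and (B) boil down to a small negotiation: the engine tells the reader which columns and filters it would like, and the reader reports back what it can handle. Below is a minimal, dependency-free sketch of that negotiation. The `Filter` and `Reader` types are hypothetical stand-ins rather than Spark classes, and the rule "only equality on an indexed column is pushed down" is an assumption made purely for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Dependency-free model of column pruning and filter pushdown; names are hypothetical. */
final class ReadPathSketch {

    /** Minimal stand-in for an engine filter: one operator applied to one column. */
    static final class Filter {
        final String op;
        final String column;
        final Object value;

        Filter(String op, String column, Object value) {
            this.op = op;
            this.column = column;
            this.value = value;
        }

        @Override public String toString() { return column + " " + op + " " + value; }
    }

    /** Driver-side planning state of the reader. */
    static final class Reader {
        private final List<String> fullSchema;
        private final Set<String> indexedColumns;
        private List<String> requiredSchema;
        private final List<Filter> pushed = new ArrayList<>();

        Reader(List<String> fullSchema, Set<String> indexedColumns) {
            this.fullSchema = fullSchema;
            this.indexedColumns = indexedColumns;
            this.requiredSchema = fullSchema; // read everything unless told otherwise
        }

        /** (A) Keep only the requested columns that exist, in the requested order. */
        void pruneColumns(List<String> requested) {
            List<String> kept = new ArrayList<>();
            for (String column : requested) {
                if (fullSchema.contains(column)) kept.add(column);
            }
            requiredSchema = kept;
        }

        /** (B) Accept filters the index can answer; return the rest to the engine. */
        List<Filter> pushFilters(List<Filter> filters) {
            pushed.clear();
            List<Filter> unsupported = new ArrayList<>();
            for (Filter f : filters) {
                // Assumption: only equality on an indexed column can be pushed down.
                boolean supported = "=".equals(f.op) && indexedColumns.contains(f.column);
                (supported ? pushed : unsupported).add(f);
            }
            return unsupported;
        }

        List<String> requiredSchema() { return requiredSchema; }

        List<Filter> pushedFilters() { return pushed; }
    }
}
```

The important detail is the return value of `pushFilters`: whatever the reader hands back must still be evaluated by the engine after the scan, so an unsupported filter costs performance but never correctness.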

- Client - loads a previously written Dataset from a given root directory

- LuceneDataSourceV2 - plugs LuceneDataSourceV2Reader into the standard API

- LuceneDataSourceV2Reader - loads the Lucene schema from the root directory and prunes it to align with the schema requested by Spark; in case of predicate pushdown, recognizes supported filter types and lets the Spark engine know; scans the root directory for partition subdirectories and creates a LuceneReadTask for each

- LuceneReadTask - is sent to worker nodes where it uses a LuceneIndexReader to read data Rows from the corresponding Lucene index

- LuceneIndexReader - wraps Lucene index access; pushes the predicates chosen by the LuceneDataSourceV2Reader to the Lucene engine
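
The planning step that turns a directory layout into read tasks is simple enough to sketch with the JDK alone. This is a rough illustration, not the prototype's code: `ReadTask` and `planTasks` are hypothetical names, and the `partition-N` and `schema.txt` names used in the demo are assumptions.

```java
import java.io.IOException;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Dependency-free model of read planning; all names are hypothetical. */
final class PartitionScanSketch {

    /** Serializable so it could be shipped to a worker; knows only its index directory. */
    static final class ReadTask implements Serializable {
        final String indexDir;

        ReadTask(String indexDir) { this.indexDir = indexDir; }
    }

    /** One task per subdirectory of the root; plain files such as the schema are skipped. */
    static List<ReadTask> planTasks(Path rootDir) {
        try (Stream<Path> children = Files.list(rootDir)) {
            return children.filter(Files::isDirectory)
                           .map(Path::toString)
                           .sorted() // deterministic task order
                           .map(ReadTask::new)
                           .collect(Collectors.toList());
        } catch (IOException e) { throw new UncheckedIOException(e); }
    }

    /** Builds a small directory layout in a temp directory and plans tasks for it. */
    static List<String> demo() {
        try {
            Path root = Files.createTempDirectory("lucene-read-sketch");
            Files.createDirectories(root.resolve("partition-1"));
            Files.createDirectories(root.resolve("partition-0"));
            Files.write(root.resolve("schema.txt"), Collections.singletonList("title:indexed"));
            return planTasks(root).stream()
                                  .map(task -> Paths.get(task.indexDir).getFileName().toString())
                                  .collect(Collectors.toList());
        } catch (IOException e) { throw new UncheckedIOException(e); }
    }
}
```

As with the writer factory, the task holds a path string rather than an open index, so nothing Lucene-specific has to be serialized; the index would be opened only once the task runs on a worker.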

The first impression is that the new API is actually an API. It has a clear structure and can be extended with additional functionality. We can already see exciting work happening in 2.4 master.