Last time on "Sheldon Cooper Presents: Fun with Flags" we saw a lightweight alternative to ANTLR used to parse a simple query DSL. I was having too much trouble with another alternative, so in the meantime I decided to finish a proper ANTLR version of the parser. As luck would have it, ANTLR4 offers more than one way to write a parser. So for the low, low price of a single grammar file you get two parsers: one written in the officially recommended way and another following a more traditional approach.

Strictly speaking, there are two grammar files, but if you squint a little you can see that one was created by adding actions to a copy of the other. ANTLR4 tries to keep actions out of the grammar file, both to simplify the file and to make it possible to generate parsers in languages other than Java.

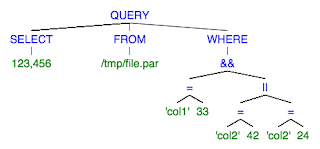

Instead of actions, one can implement a parse tree listener interface. The good news is that it does allow better isolation between the grammar and the Java code. The bad news is that there is even more choice if you want to do something that requires sharing state between parser rules, like building an AST.

Of the two simplest choices, I decided to use a manually maintained stack. It could probably be done with a visitor implementation returning AST nodes, but the listener-with-a-stack approach makes the juxtaposition with Parboiled even better. A new trick in ANTLR4 is labeling rule alternatives with "# Something" in the grammar, which makes ANTLR generate a listener method for every labeled alternative.
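To make the stack idea concrete, here is a minimal sketch of how such a listener might assemble an AST. It deliberately avoids the ANTLR runtime: `AstBuildingListener` only imitates the exit callbacks that ANTLR would generate for alternatives labeled `# Term`, `# And` and `# Or`, and every name in it (the labels, the node classes, the sample query) is an assumption made for illustration, not the code from this post. In real generated code each callback would receive a context object rather than plain strings.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical AST for a tiny query DSL; these types are illustrative only.
interface Node {}

final class Term implements Node {
    final String field;
    final String value;
    Term(String field, String value) { this.field = field; this.value = value; }
    @Override public String toString() { return field + "=" + value; }
}

final class And implements Node {
    final Node left;
    final Node right;
    And(Node left, Node right) { this.left = left; this.right = right; }
    @Override public String toString() { return "(" + left + " AND " + right + ")"; }
}

final class Or implements Node {
    final Node left;
    final Node right;
    Or(Node left, Node right) { this.left = left; this.right = right; }
    @Override public String toString() { return "(" + left + " OR " + right + ")"; }
}

// Stand-in for a generated listener: one exit method per labeled alternative.
final class AstBuildingListener {
    // Shared state between rules: children are pushed before their parent is built.
    private final Deque<Node> stack = new ArrayDeque<>();

    void exitTerm(String field, String value) {
        stack.push(new Term(field, value));
    }

    void exitAnd() {
        Node right = stack.pop(); // operands come off in reverse order
        Node left = stack.pop();
        stack.push(new And(left, right));
    }

    void exitOr() {
        Node right = stack.pop();
        Node left = stack.pop();
        stack.push(new Or(left, right));
    }

    Node result() {
        if (stack.size() != 1) {
            throw new IllegalStateException("expected exactly one root node, got " + stack.size());
        }
        return stack.pop();
    }
}

final class ListenerStackDemo {
    // Replays the callbacks in the order a tree walk would fire them
    // for the hypothetical query: a=1 AND (b=2 OR c=3)
    static Node build() {
        AstBuildingListener listener = new AstBuildingListener();
        listener.exitTerm("a", "1");
        listener.exitTerm("b", "2");
        listener.exitTerm("c", "3");
        listener.exitOr();
        listener.exitAnd();
        return listener.result();
    }

    public static void main(String[] args) {
        System.out.println(build()); // prints (a=1 AND (b=2 OR c=3))
    }
}
```

The exit callbacks fire in post-order, so by the time a binary node is built both of its operands are already on the stack. That is what lets a listener, whose methods return nothing, still produce a tree.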

The listener ended up looking more similar to the Parboiled parser than I had expected. That made me think about trying the traditional approach with actions embedded in the grammar. At first glance, the listener approach does look cleaner, at no discernible cost.

ANTLR is still very good at skipping whitespace at the lexer level. No need to contaminate the parser rules with redundant checks everywhere. But to my surprise I actually found a couple of problems. To begin with, there is no easy way to make keywords case-insensitive. Secondly, I failed to write a parser rule that would return "unquoted" string values without breaking the ID lexer rule. I am probably not the only one confused by the latter. After some experimentation I simply settled on manually calling String::substring.
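For illustration, the manual unquoting might look something like the helper below. This is only a sketch: the class and method names are hypothetical, and it assumes string tokens are wrapped in a matching pair of single or double quotes with no escape sequences to process.

```java
// Hypothetical helper; not the code from this post.
final class Quotes {
    private Quotes() {}

    // Strips one pair of matching surrounding quotes from a token's text.
    // Anything that is not quoted this way is returned unchanged.
    static String unquote(String tokenText) {
        int n = tokenText.length();
        if (n >= 2) {
            char first = tokenText.charAt(0);
            char last = tokenText.charAt(n - 1);
            if ((first == '"' || first == '\'') && first == last) {
                return tokenText.substring(1, n - 1);
            }
        }
        return tokenText;
    }
}
```

In a listener it would be applied to the text of the string token, leaving the lexer rules themselves untouched.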

In addition to two spectacular books, there is a lot of online documentation. The ANTLR Maven plugin works seamlessly with no additional configuration, so the talk of an "additional build process step" is greatly exaggerated.

Between an easier-to-read grammar file and very powerful IDE integration, ANTLR remains the leader. But from what I see, the code size and complexity are roughly the same as for Parboiled. ANTLR seems to need only a single runtime library of about 300K. Parboiled has half a dozen dependencies (mostly ASM jars) of comparable total size.

So as a provisional conclusion, I no longer feel strongly that ANTLR is the only right answer to parsing needs in Java. I should probably run a performance test at some point, but I doubt ±20 ms is of real concern to most consumers of small DSLs.